Summary

Below is a list of the projects I worked on during my PhD.

The UrbanVision System


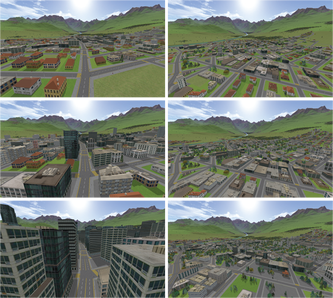

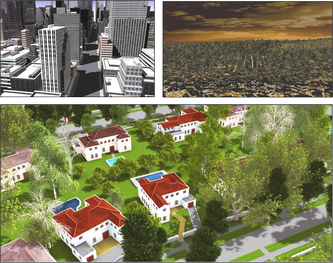

Overview. UrbanVision is an open source software system for visualizing alternative land use and transportation scenarios at scales ranging from large metropolitan areas to individual neighborhoods. The motivation behind this system is to fill the gap between the outputs of existing land use and transportation models and the automatic generation of 3D urban models and visualizations. The project is a collaborative effort between the University of California, Berkeley and Purdue University, led by Prof. Paul Waddell (Berkeley) and by Profs. Daniel Aliaga and Bedrich Benes (both at Purdue). The initial system is deployed for the San Francisco Bay Area, CA, which spans over 7 million people and 1.5 million parcels of land, and we anticipate deployment to other national and international cities in the future. Altogether, the system’s capabilities provide valuable assistance in the difficult task of engaging the public and key local stakeholders in evaluating alternative approaches to attaining more sustainable communities and in achieving consensus on the policies and plans to implement.


Building Reconstruction


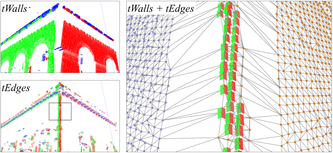

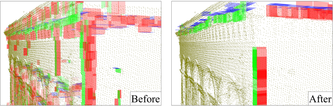

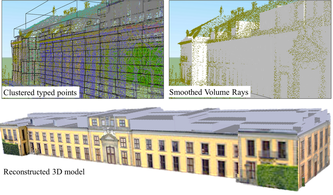

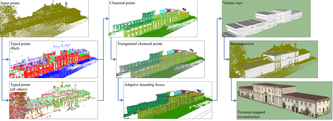

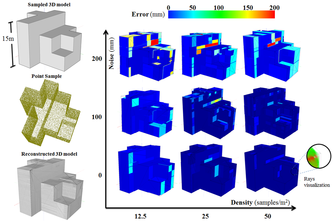

Automatic Extraction of Manhattan-World Building Masses from 3D Laser Range Scans, Carlos A. Vanegas, Daniel G. Aliaga, Bedrich Benes, IEEE Transactions on Visualization and Computer Graphics, 2012.

Abstract. We propose a novel approach for the reconstruction of urban structures from 3D point clouds with an assumption of Manhattan World (MW) building geometry; i.e., the predominance of three mutually orthogonal directions in the scene. Our approach works in two steps. First, the input points are classified according to the MW assumption into four local shape types: walls, edges, corners, and edge-corners. Second, the classified points are organized into a connected set of clusters from which a volume description is extracted. The MW assumption allows us to robustly identify the fundamental shape types, describe the volumes within the bounding box, and reconstruct visible and occluded parts of the sampled structure. We show results of applying our reconstruction to several synthetic and real-world 3D point datasets of various densities and from multiple viewpoints. Our method automatically reconstructs 3D building models from up to 10 million points in 10 to 60 seconds.
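As a rough illustration of the classification step, the sketch below labels a point by how many of the three axis-aligned directions appear among the surface normals in its neighborhood. This is not the paper's algorithm: the function names, the 15-degree tolerance, the fixed x/y/z frame, and the mapping from axis count to label are all assumptions made for the example, and the edge-corner type is left out because the abstract does not define it.

```python
import numpy as np

# Assumed Manhattan-World frame: the three world axes x, y, z.
AXES = np.eye(3)


def dominant_axis(normal, tol_deg=15.0):
    """Return the index of the axis a (nonzero) normal aligns with, or None."""
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)
    # Absolute cosine so that a normal and its opposite map to the same axis.
    cosines = np.abs(AXES @ n)
    k = int(np.argmax(cosines))
    return k if cosines[k] >= np.cos(np.radians(tol_deg)) else None


def classify_neighborhood(normals, tol_deg=15.0):
    """Label a point from the normals of its neighbors (hypothetical rule).

    One distinct axis -> planar patch ("wall"), two -> "edge", three -> "corner".
    """
    axes = {dominant_axis(n, tol_deg) for n in normals}
    axes.discard(None)  # ignore normals that match no axis
    return {0: "unclassified", 1: "wall", 2: "edge", 3: "corner"}[len(axes)]
```

For example, a neighborhood whose normals point along x and y only would be labeled an edge under this rule, while one containing all three directions would be labeled a corner.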


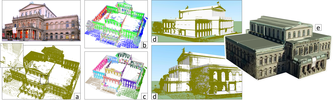

Building Reconstruction using Manhattan-World Grammars, Carlos A. Vanegas, Daniel G. Aliaga, Bedrich Benes, IEEE Computer Vision and Pattern Recognition (CVPR), 2010.

Abstract. We present a passive computer vision method that exploits existing mapping and navigation databases in order to automatically create 3D building models. Our method defines a grammar for representing changes in building geometry that approximately follow the Manhattan-world assumption, which states that there is a predominance of three mutually orthogonal directions in the scene. By using multiple calibrated aerial images, we extend previous Manhattan-world methods to robustly produce a single, coherent, complete geometric model of a building with partial textures. Our method uses an optimization to discover a 3D building geometry that produces the same set of façade orientation changes observed in the captured images. We have applied our method to several real-world buildings and have analyzed our approach using synthetic buildings.


Design and Editing of 3D Urban Spaces


Inverse Design of Urban Procedural Models, Carlos A. Vanegas, Ignacio Garcia-Dorado, Daniel Aliaga, Bedrich Benes, Paul Waddell, ACM Transactions on Graphics (also in Proceedings SIGGRAPH Asia), 31(6), 2012.

Abstract. We propose a framework that enables adding intuitive high-level control to an existing urban procedural model. In particular, we provide a mechanism to interactively edit urban models, a task which is important to stakeholders in gaming, urban planning, mapping, and navigation services. Procedural modeling allows a quick creation of large complex 3D models, but controlling the output is a well-known open problem. Thus, while forward procedural modeling has thrived, in this paper we add to the arsenal an inverse modeling tool. Users, unaware of the rules of the underlying urban procedural model, can alternatively specify arbitrary target indicators to control the modeling process. The system itself will discover how to alter the parameters of the urban procedural model so as to produce the desired 3D output. We label this process inverse design.


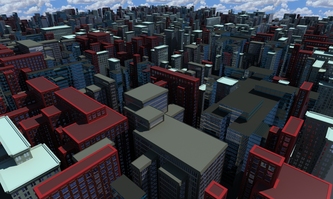

Interactive Design of Urban Spaces using Geometrical and Behavioral Modeling, Carlos A. Vanegas, Daniel G. Aliaga, Bedrich Benes, Paul Waddell, ACM Transactions on Graphics (Proceedings SIGGRAPH Asia), 28(5), 2009.

Abstract. The main contribution of this work is in closing the loop between behavioral and geometrical modeling of cities. Editing of urban design variables is performed intuitively and visually using a graphical user interface. Any design variable can be constrained or changed. The design process uses an iterative dynamical system for reaching equilibrium: a state where the demands of behavioral modeling match those of geometrical modeling. 3D models are generated in a few seconds and conform to plausible urban behavior and urban geometry. Our framework includes an interactive agent-based behavioral modeling system as well as adaptive geometry generation algorithms. We demonstrate interactive and incremental design and editing for synthetic urban spaces spanning over 200 square kilometers.


State-of-the-Art in Urban Modeling


Modeling 3D Urban Spaces Using Procedural and Simulation-Based Techniques, Peter Wonka, Daniel G. Aliaga, Carlos A. Vanegas, Pascal Müller, Michael Frederickson, ACM SIGGRAPH Course, 2011.

Abstract. This course presents the state-of-the-art in urban modeling, including the modeling of urban layouts, architecture, image-based buildings and façades, and urban simulation and visualization. Digital content creation is a significant challenge in many applications of computer graphics. This course will explain procedural modeling techniques for urban environments as an important complement to traditional modeling software. Attendees of this course will learn procedural techniques to efficiently create highly detailed three-dimensional urban models.


Course Website


Modeling the Appearance and Behavior of Urban Spaces, Carlos A. Vanegas, Daniel G. Aliaga, Peter Wonka, Pascal Mueller, Paul Waddell, Benjamin Watson, Computer Graphics Forum, 29(1):25-42. Also in Eurographics 2009 STAR (State-of-the-Art Report), 2010.

Abstract. Urban spaces consist of a complex collection of buildings, parcels, blocks and neighborhoods interconnected by streets. Accurately modeling both the appearance and the behavior of dense urban spaces is a significant challenge. The recent surge in urban data and its availability via the Internet has fomented a significant amount of research in computer graphics and in a number of applications in urban planning, emergency management, and visualization. In this article, we seek to provide an overview of methods spanning computer graphics and related fields involved in this goal. Our article reports the most prominent methods in urban modeling and rendering, urban visualization, and urban simulation models. A reader will be well versed in the key problems and current solution methods.

Some images courtesy of co-authors


Visualization of Urban Simulation


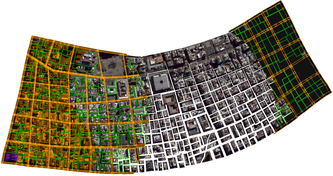

Visualization of Simulated Urban Spaces: Inferring Parameterized Generation of Streets, Parcels, and Aerial Imagery, Carlos A. Vanegas, Daniel G. Aliaga, Bedrich Benes, Paul Waddell, IEEE Transactions on Visualization and Computer Graphics, 15(3):424-435, 2009.

Abstract. We build on a synergy of urban simulation, urban visualization, and computer graphics to automatically infer an urban layout for any time step of the simulation sequence. In addition to standard visualization tools, our method gathers data of the original street network, parcels, and aerial imagery and uses the available simulation results to infer changes to the original urban layout. Our method produces a new and plausible layout for the simulation results. In contrast with previous work, our approach automatically updates the layout based on changes in the simulation data and thus can scale to a large simulation over many years. The method in this article offers a substantial step forward in building integrated visualization and behavioral simulation systems for use in community visioning, planning, and policy analysis.


Design and Editing of 2D Urban Layouts


Procedural Generation of Parcels in Urban Modeling, Carlos A. Vanegas, Tom Kelly, Basil Weber, Jan Halatsch, Daniel Aliaga, Pascal Müller, Computer Graphics Forum (Proceedings Eurographics), 2012.

Abstract. We present a method for interactive procedural generation of parcels within the urban modeling pipeline. Our approach performs a partitioning of the interior of city blocks using user-specified subdivision attributes and style parameters. Moreover, our method is both robust and persistent in the sense of being able to map individual parcels from before an edit operation to after an edit operation - this enables transferring most, if not all, customizations despite small to large-scale interactive editing operations. The guidelines guarantee that the resulting subdivisions are functionally and geometrically plausible for subsequent building modeling and construction. Our results include visual and statistical comparisons that demonstrate how the parcel configurations created by our method can closely resemble those found in real-world cities of a large variety of styles. By directly addressing the block subdivision problem, we intend to increase the editability and realism of the urban modeling pipeline and to become a standard in parcel generation for future urban modeling methods.
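To make the block subdivision problem concrete, here is a toy sketch that recursively splits a rectangular block into parcels. It is only an illustration and does not reproduce the method of the paper: it assumes axis-aligned rectangular blocks, always halves the longer side, and uses a single hypothetical parameter, `max_area`, in place of the user-specified subdivision attributes and style parameters.

```python
def subdivide(block, max_area):
    """Split a rectangle (x, y, width, height) into parcels.

    The rectangle is halved along its longer side until every piece has an
    area of at most max_area. max_area must be positive.
    """
    x, y, w, h = block
    if w * h <= max_area:
        return [block]
    if w >= h:
        half = w / 2.0
        parts = [(x, y, half, h), (x + half, y, half, h)]
    else:
        half = h / 2.0
        parts = [(x, y, w, half), (x, y + half, w, half)]
    parcels = []
    for part in parts:
        parcels.extend(subdivide(part, max_area))
    return parcels


# Usage: a 100 x 50 block with parcels of at most 1500 square units.
parcels = subdivide((0.0, 0.0, 100.0, 50.0), max_area=1500.0)
```

A realistic parcel generator would also have to handle irregular block shapes and street access, which this sketch ignores.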


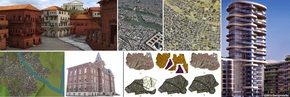

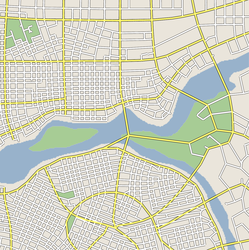

Interactive Example‐Based Urban Layout Synthesis, Daniel G. Aliaga, Carlos A. Vanegas, Bedrich Benes, ACM Transactions on Graphics (Proceedings SIGGRAPH Asia), 27(5), 2008.

Abstract. We present an interactive system for synthesizing urban layouts by example. Our method simultaneously performs both a structure-based synthesis and an image-based synthesis to generate a complete urban layout with a plausible street network and with aerial-view imagery. Our approach uses the structure and image data of real-world urban areas and a synthesis algorithm to provide several high-level operations to easily and interactively generate complex layouts by example. The user can create new urban layouts by a sequence of operations such as join, expand, and blend without being concerned about low-level structural details. Further, the ability to blend example urban layout fragments provides a powerful way to generate new synthetic content. We demonstrate our system by creating urban layouts using example fragments from several real-world cities, each ranging from hundreds to thousands of city blocks and parcels.


Interactive Reconfiguration of Urban Layouts, Daniel G. Aliaga, Bedrich Benes, Carlos A. Vanegas, Nathan Andrysco, IEEE Computer Graphics and Applications (Special Issue on Urban Modeling), 28(3):38‐47, 2008.

Abstract. The ability to create and edit a model of a large-scale city is necessary for a variety of applications. Although the layout of the urban space is captured as images, it consists of a complex collection of man-made structures arranged in parcels, city blocks, and neighborhoods. Editing the content as unstructured images yields undesirable results. However, most GIS maintain and provide digital records of metadata such as road network, land use, parcel boundaries, building type, water/sewage pipes and power lines that can be used as a starting point to infer and manipulate higher-level structure. We describe an editor for interactive reconfiguration of city layouts, which provides tools to expand, scale, replace and move parcels and blocks, while efficiently exploiting their connectivity and zoning. Our results include applying the system to several cities with different urban layouts by sequentially applying transformations.
